Context

This article walks through configuring an AWS Virtual Private Cloud (VPC) so that a centralized gateway IDS/IPS can be used in the cloud, as is done today in virtualized environments.

As part of this research, several security solutions available in the AWS Marketplace were analyzed to identify existing techniques that implement some form of centralized network IDS/IPS. The most notable findings are summarized below:

- Sophos UTM 9: host-based security software providing the following features:

  - Web Server Protection

  - Web Protection

  - VPN support

- CohesiveFT: VNS-3 (Virtual Network Server), available in the AWS Marketplace, creates an overlay network that gives control over addressing, topology, protocols, and encrypted communication between virtual infrastructure and cloud computing centers. It also supports IPsec tunneling, similar to a site-to-site VPN, to provide single-LAN connectivity between environments.

  - Their solutions mainly target interoperability between clouds and virtual environments.

  - Centralized IDS can be achieved by routing all traffic on-premises through the IPsec tunnel and using existing gateway solutions to monitor for threats and attacks.

- Cisco ASA: Cisco's ASA (Adaptive Security Appliance) series is designed to provide point-to-point VPN access to individual compute instances in the cloud. In a VPC deployment, establishing centralized security with this approach means setting up VPN tunnels from AWS to the organization's corporate network, and this has to be done on a per-instance basis.

While products such as these provide VPN/IPsec and a single view of the network topology across clouds, none of them offers a centralized IDS/IPS within the AWS cloud analogous to the solutions available on-premises in VMware environments.

To determine whether this approach is feasible in an AWS VPC, a prototype was built using a VPC with two subnets. The details are discussed below.

Prototype Setup

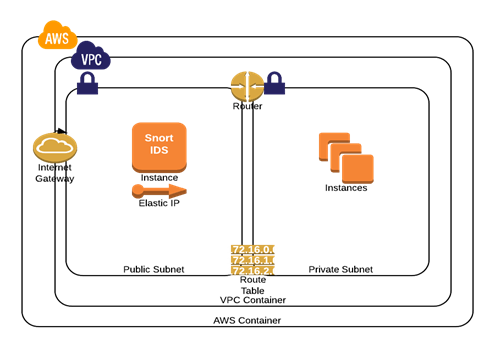

A centralized NIDS must see all traffic in order to enforce policies effectively and detect attacks and malicious traffic. To achieve this within the AWS infrastructure, a VPC with the following configuration was deployed.

This configuration can easily be deployed using the standard VPC wizard in AWS.

The design uses a VPC with one public and one private subnet. The public subnet contains a compute instance (the NAT instance) with an associated Elastic IP (EIP). This instance serves as the 'router' for the rest of the internal deployment.
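The addresses used later in this article are the NAT instance at 10.0.0.58, the web server at 10.0.1.113, and the VPC range 10.0.0.0/16. These are consistent with splitting the /16 into a public 10.0.0.0/24 and a private 10.0.1.0/24 subnet. The sketch below assumes that split; it is inferred from the addresses and not stated in the original setup. It uses Python's standard `ipaddress` module to confirm which subnet each host falls in.

```python
import ipaddress

# VPC range taken from the iptables rules in this article.
VPC = ipaddress.ip_network("10.0.0.0/16")

# Assumed subnet layout, inferred from the host addresses used in the article.
SUBNETS = {
    "public": ipaddress.ip_network("10.0.0.0/24"),
    "private": ipaddress.ip_network("10.0.1.0/24"),
}


def subnet_of(host: str) -> str:
    """Return the name of the subnet that contains `host`."""
    addr = ipaddress.ip_address(host)
    for name, net in SUBNETS.items():
        if addr in net:
            return name
    raise ValueError(f"{host} is not in the public or private subnet")


# Both subnets must be carved out of the VPC range.
assert all(net.subnet_of(VPC) for net in SUBNETS.values())

if __name__ == "__main__":
    print(subnet_of("10.0.0.58"))   # NAT instance -> public
    print(subnet_of("10.0.1.113"))  # lighttpd web server -> private
```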

The internet gateway itself is currently limited in functionality and lacks the capabilities needed to support an IDS/IPS.

The route table is modified so that the private subnet cannot reach the outside world except through the instance in the public subnet. In essence, this instance, which will later run Snort, acts as a NAT for the rest of the network inside the VPC. That makes it the ideal place to deploy NIDS capabilities.
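The routing described above can be modeled in a few lines. A VPC route table sends traffic to the most specific route that matches the destination (longest prefix match). In this design, the private subnet's default route points at the NAT instance and the public subnet's default route points at the internet gateway. The route targets below ("local", "igw", "nat-instance") are illustrative placeholders, not real AWS resource IDs.

```python
import ipaddress

# Hypothetical route tables mirroring the design above; targets are placeholders.
ROUTE_TABLES = {
    "public": [("10.0.0.0/16", "local"), ("0.0.0.0/0", "igw")],
    "private": [("10.0.0.0/16", "local"), ("0.0.0.0/0", "nat-instance")],
}


def next_hop(table: str, destination: str) -> str:
    """Return the target of the most specific route matching `destination`."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in ROUTE_TABLES[table]
        if dest in ipaddress.ip_network(cidr)
    ]
    # 0.0.0.0/0 always matches, so there is at least one candidate;
    # the longest prefix (most specific route) wins.
    _net, target = max(matches, key=lambda m: m[0].prefixlen)
    return target


if __name__ == "__main__":
    print(next_hop("private", "203.0.113.10"))  # nat-instance
    print(next_hop("private", "10.0.0.58"))     # local
```

If the setup is built by hand rather than with the wizard, the private subnet's default route can be added with `aws ec2 create-route --route-table-id <private-route-table-id> --destination-cidr-block 0.0.0.0/0 --instance-id <nat-instance-id>`. The NAT instance forwards packets that are not addressed to itself, so its source/destination check must also be disabled, for example with `aws ec2 modify-instance-attribute --instance-id <nat-instance-id> --no-source-dest-check`.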

The web server instance in the private subnet runs lighttpd, serving a test page on port 80. For this prototype we assume the following:

- Only port 80 (HTTP) traffic is analyzed.

- Only one web server, running lighttpd, is set up in the private subnet.

iptables Configuration

The AWS NAT instance supports outbound connections from the private subnet to the internet via the internet gateway (IGW). Out of the box, it does not allow inbound connections from the outside world into the private subnet. For the NAT instance to monitor all traffic, its NAT configuration must be extended to handle both inbound and outbound connections.

For this prototype, the NAT instance must handle two scenarios:

- Inbound: the end user should be able to make an HTTP request to the EIP of the NAT instance. The NAT instance should forward the request to the web server and route the server's response back to the user.

- Outbound: the web server instance in the private subnet should still be able to reach the internet through the NAT instance, as before, without being redirected or blocked.

Supporting both scenarios requires changes to the iptables rules.
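The NAT rules only have an effect if the Linux kernel is allowed to forward packets that are not addressed to the host itself. The wizard's NAT instance already forwards packets for the outbound case, but a quick check before editing rules can save debugging time. This is a minimal sketch that reads the standard `/proc/sys/net/ipv4/ip_forward` flag. The function names are hypothetical.

```python
from pathlib import Path

# Standard Linux location of the IPv4 forwarding flag.
IP_FORWARD = Path("/proc/sys/net/ipv4/ip_forward")


def parse_ip_forward(text: str) -> bool:
    """The kernel exposes "1" when IPv4 forwarding is enabled and "0" otherwise."""
    return text.strip() == "1"


def ip_forwarding_enabled(path: Path = IP_FORWARD) -> bool:
    """Read the flag from the running system (Linux only)."""
    return parse_ip_forward(path.read_text())
```

On the NAT instance, `ip_forwarding_enabled()` should return `True`. If it returns `False`, forwarding is off and neither the existing MASQUERADE rule nor a new DNAT rule will pass traffic through.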

Default Setting

Once the VPC is set up, the NAT instance has the following iptables configuration, which implements the NAT behavior:

$> sudo service iptables status

.....

.....

.....

Chain OUTPUT (policy ACCEPT)

num target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

num target prot opt source destination

1 MASQUERADE all -- 10.0.0.0/16 0.0.0.0/0

This rule masquerades the source IP of all outbound traffic generated within the VPC range 10.0.0.0/16.
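The effect of the MASQUERADE rule can be shown with a simplified model. It rewrites the source address of packets coming from inside the VPC and leaves other packets untouched. The NAT instance address 10.0.0.58 is an assumption inferred from the `ip-10-0-0-58` hostname in this article. Unlike the real rule, the model treats each packet independently and ignores connection tracking.

```python
import ipaddress
from typing import NamedTuple

VPC = ipaddress.ip_network("10.0.0.0/16")
# NAT instance's private address, inferred from the ip-10-0-0-58 hostname.
NAT_PRIVATE_IP = "10.0.0.58"


class Packet(NamedTuple):
    src: str
    dst: str


def postrouting_masquerade(pkt: Packet) -> Packet:
    """Model of `MASQUERADE all -- 10.0.0.0/16 0.0.0.0/0`."""
    if ipaddress.ip_address(pkt.src) in VPC:
        # Hide the internal sender behind the NAT instance's own address.
        return pkt._replace(src=NAT_PRIVATE_IP)
    return pkt


if __name__ == "__main__":
    # Web server reaching an external host (documentation address 203.0.113.10).
    print(postrouting_masquerade(Packet("10.0.1.113", "203.0.113.10")))
```

The rewritten source is the NAT instance's private address. The internet gateway then maps that address to the EIP on the way out.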

Inbound connection forwarding

We add another rule so that inbound connections are routed to the web server deployed in the private subnet (10.0.1.113:80):

$> sudo iptables -t nat -A PREROUTING -p tcp \! -s 10.0.0.0/16 --dport 80 -j DNAT --to-destination 10.0.1.113:80

This rule sends all TCP traffic that arrives on port 80 from outside the VPC range (10.0.0.0/16) to the web server at 10.0.1.113:80. As a result, the NAT instance sees all traffic to and from the web server. Because of the source exclusion, outbound connections from the private subnet to the internet (for example, downloading Linux package updates) are not rewritten by the DNAT rule and pass through as intended.
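If the prototype is repeated with different addresses, a small helper can assemble the same command instead of editing it by hand. This is a hypothetical dry-run helper that only builds the argument list with the same flags as the command above; it does not execute anything.

```python
import shlex


def dnat_rule(vpc_cidr: str, web_server: str, port: int = 80) -> list[str]:
    """Build the argv for the inbound DNAT rule (dry run; nothing is executed)."""
    return [
        "iptables", "-t", "nat", "-A", "PREROUTING",
        "-p", "tcp",                   # --dport requires a protocol match
        "!", "-s", vpc_cidr,           # skip traffic that originates inside the VPC
        "--dport", str(port),
        "-j", "DNAT", "--to-destination", f"{web_server}:{port}",
    ]


if __name__ == "__main__":
    # shlex.join quotes "!" so the printed command is safe to paste into a shell.
    print(shlex.join(["sudo"] + dnat_rule("10.0.0.0/16", "10.0.1.113")))
```

Passing the list to `subprocess.run(..., check=True)` as root on the NAT instance would apply the rule. Printing it first makes the change easy to review.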

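For connections that originate in the private subnet, the POSTROUTING rule masquerades the source IP to the NAT instance's own address, which the internet gateway in turn maps to the EIP. This keeps internal private addresses hidden from the outside world. For inbound connections, connection tracking reverses the DNAT on the web server's replies, so those responses also appear to come from the NAT instance.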

Without the VPC range exclusion in this rule, outbound port 80 traffic from the private subnet would also match it. That traffic would be redirected back to the web server itself, in effect a loop, instead of reaching the internet.
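The loop can be reproduced with a simplified model of the PREROUTING decision for a new TCP connection, with and without the `! -s 10.0.0.0/16` exclusion. The external addresses are placeholders from the documentation ranges (198.51.100.0/24 and 203.0.113.0/24), not part of the original setup.

```python
import ipaddress

VPC = ipaddress.ip_network("10.0.0.0/16")
WEB_SERVER = ("10.0.1.113", 80)


def prerouting_dnat(src: str, dst: str, dport: int, exclude_vpc: bool = True) -> tuple[str, int]:
    """Return where a new TCP connection is sent after PREROUTING.

    Models `-p tcp [! -s 10.0.0.0/16] --dport 80 -j DNAT --to-destination 10.0.1.113:80`.
    """
    from_vpc = ipaddress.ip_address(src) in VPC
    if dport == 80 and not (exclude_vpc and from_vpc):
        return WEB_SERVER
    return (dst, dport)


if __name__ == "__main__":
    # Outbound HTTP from the web server to an external site.
    print(prerouting_dnat("10.0.1.113", "203.0.113.10", 80))                     # ('203.0.113.10', 80)
    # Same request without the exclusion: it is sent back to the web server.
    print(prerouting_dnat("10.0.1.113", "203.0.113.10", 80, exclude_vpc=False))  # ('10.0.1.113', 80)
```

Inbound requests from an outside client such as 198.51.100.7 reach the web server in both variants. Only the private subnet's own outbound HTTP traffic depends on the exclusion.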

The final iptables rule set looks like this:

[ec2-user@ip-10-0-0-58 ~]$ sudo service iptables status

Table: filter

Chain INPUT (policy ACCEPT)

num target prot opt source destination

Chain FORWARD (policy ACCEPT)

num target prot opt source destination

Chain OUTPUT (policy ACCEPT)

num target prot opt source destination

Table: nat

Chain PREROUTING (policy ACCEPT)

num target prot opt source destination

1 DNAT tcp -- !10.0.0.0/16 0.0.0.0/0 tcp dpt:80 to:10.0.1.113:80

Chain OUTPUT (policy ACCEPT)

num target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

num target prot opt source destination

1 MASQUERADE all -- 10.0.0.0/16 0.0.0.0/0
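Before attaching an IDS, it can help to check automatically that both NAT rules are still in place, for example after a reboot. Rules added with `iptables -A` are not persistent unless saved, e.g. with `service iptables save` on Amazon Linux/RHEL-style systems. This sketch parses the output of `iptables -t nat -S`, which prints rules in `iptables-save` syntax. The expected token sets are an assumption that matches the rules shown above.

```python
import shlex
import subprocess

# (chain, tokens that must all appear in one rule of that chain)
EXPECTED_RULES = [
    ("PREROUTING", {"!", "-s", "10.0.0.0/16", "--dport", "80", "DNAT", "10.0.1.113:80"}),
    ("POSTROUTING", {"-s", "10.0.0.0/16", "MASQUERADE"}),
]


def missing_rules(nat_rules_text: str) -> list[str]:
    """Return the chains whose expected rule is absent from `iptables -t nat -S` output."""
    rules = [shlex.split(line) for line in nat_rules_text.splitlines() if line.startswith("-A ")]
    missing = []
    for chain, tokens in EXPECTED_RULES:
        if not any(rule[1] == chain and tokens <= set(rule) for rule in rules):
            missing.append(chain)
    return missing


def current_nat_rules() -> str:
    """Fetch the live nat table (requires root on the NAT instance)."""
    result = subprocess.run(["iptables", "-t", "nat", "-S"], capture_output=True, text=True, check=True)
    return result.stdout
```

On the NAT instance, `missing_rules(current_nat_rules())` should return an empty list.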

With this setup in place, pointing a browser at the EIP of the NAT instance should return the HTTP response from the web server in the private subnet. The outbound scenario can also be tested from the private instance.
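The browser check can be scripted so it can be rerun after every rule change. This minimal sketch uses only the standard library. The `fetch` helper is hypothetical, and the URL in the usage note is a placeholder for the NAT instance's EIP.

```python
import urllib.request


def fetch(url: str, timeout: float = 5.0) -> tuple[int, str]:
    """GET `url` and return (HTTP status, body); raises on network errors."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8", errors="replace")
```

From outside the VPC, `fetch("http://<EIP>/")` should return status 200 and the lighttpd test page (the inbound scenario). Running the same call from the private instance against any external site exercises the outbound scenario.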

Result

With this setup, an IDS/IPS can now be deployed on the NAT instance to tap into all traffic entering and leaving the VPC. The next step is to deploy Snort on this instance and configure it as a simple IDS.
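As a starting point for that step, a basic Snort rule can alert on HTTP traffic to the web server. This sketch assumes Snort sniffs `eth0` on the NAT instance. Because the instance has a single interface, each inbound request may be seen twice: once addressed to the NAT instance and once, after DNAT, addressed to the web server. The rule below matches the post-DNAT copy. The helper and the message text are hypothetical.

```python
def snort_http_rule(web_server: str, sid: int = 1000001) -> str:
    """Return a simple Snort alert rule for TCP traffic to port 80 on `web_server`."""
    # Snort reserves SIDs below 1,000,000 for its distributed rules;
    # local rules conventionally use 1,000,000 and above.
    return (
        f"alert tcp any any -> {web_server} 80 "
        f'(msg:"HTTP traffic to private web server"; sid:{sid}; rev:1;)'
    )


if __name__ == "__main__":
    print(snort_http_rule("10.0.1.113"))
```

The generated line can be added to a local rules file included by `snort.conf` (the path depends on the installation). Snort 2.x can then be started in IDS mode, for example with `snort -i eth0 -c /etc/snort/snort.conf -A console`.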

This research raises the following questions:

- How do consumers of cloud infrastructure services use resources in AWS or similar clouds to deploy their multi-tier applications? Is there a need for centralized network IDS/IPS solutions today?

- How will this trend change in the coming years? Is the lack of a centralized network security solution currently a hurdle for customers migrating from virtual infrastructure to the cloud?

- Today, a network IDS/IPS can be implemented by enforcing a VPN tunnel to the VPC in the AWS cloud, so that all traffic is monitored by the corporate network via the VPN. This lets organizations set up IDS/IPS monitoring the traditional way, in front of their internet gateway. Does this model scale well today? Would it scale in environments where large workloads move to the cloud in the future?