
In the previous article, we discussed how to secure individual NT hosts in preparation for placing them on a network connected to the Internet. We discussed security in terms of layering at the host level. At this point, we'll begin to add to those layers through the way that we control, monitor, and filter communications. One of the main goals of this article is to restrict communication parameters and protocols to a few essential ones and then log those that remain as fully as possible. We also want to discuss exactly when, where, how, and in which direction(s) we want those protocols to flow.

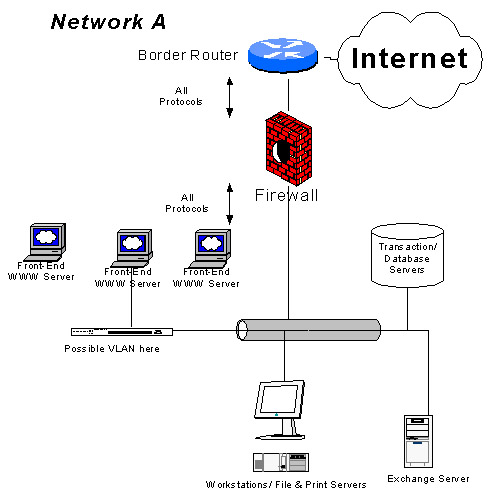

Here are a few common network designs. Try comparing these designs to your own existing designs. We'll call the first example Network A. The lines with the arrow points on both ends show how traffic in general flows.

This layout is a disaster waiting to happen. It's also one of the most common designs that I see implemented in the real world (especially among businesses with understaffed IT departments). It's popular because it's inexpensive and the easiest to implement. Traffic is free to flow in and out of the internal segments because that's where the web, mail, and DNS servers are.

People generally implement this design with private addressing on all machines that don't need to be accessible from the Internet. For the servers that do need to be accessed from the Internet, the firewall generally uses NAT to map each one to a public address, while the workstations share an address through PAT. There might be a VLAN system implemented to split the workstations from the publicly accessible servers. A common misconception is that the servers will be the only machines available for compromise because they are the only ones reachable at real addresses. The VLAN system supposedly gives an extra measure of protection by only allowing certain addresses across to the transaction databases, workstations, and mail servers. The web servers are administered from the internal workstations across the LAN.

The problem here is that traffic is not really segmented at all. One may argue that the switch (if implemented) provides good security segmentation. It doesn't. In the interest of not getting into the heavy specifics as to why in this article, I will provide this link to a SANS article on the topic and leave this line of reasoning: switches aren't designed to be secure objects. Here's the link: http://www.sans.org/infosecFAQ/switch_security.htm

If I (as an attacker) am able to compromise a web server or some other publicly accessible device, it will be very easy to break into the rest of the network. The firewall no longer protects the internal servers and workstations because it allows traffic from attackers onto the same segment as all other devices. If a web server can talk to a workstation, or a workstation can talk to a web server, then the traffic that flows between them can be attacked, and then the workstation can be attacked. My strategy will be to use the web server as a launching point for attacks on the rest of the network. Because there is no segmentation, all internal resources are now available for me to attack. Institutions that use this design are characteristically not security-savvy and pay little attention to internal security, which makes an attack on the rest of the network from the web server even easier. Even if the inbound traffic is limited to ports 80 and 443, exploits such as RDS and, more recently, Unicode move over ports that have to be open for business. Exploits like these will always be around, with more to come.
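The pivoting argument can be made concrete with a small reachability sketch. The host names and the `can_reach` map below are hypothetical, chosen only to mirror the flat segment of Network A; this is an illustration of the reasoning, not a model of any particular product.

```python
from collections import deque


def reachable_from(start, can_reach):
    """Return every host an attacker can get to by pivoting from `start`.

    `can_reach` maps a host name to the set of hosts it may open
    connections to. The search is a plain breadth-first traversal.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        for nxt in can_reach.get(host, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)  # the starting host is already compromised
    return seen


# Hypothetical flat segment like Network A: every host can talk to every other.
FLAT_HOSTS = {"web", "mail", "dns", "database", "workstation1", "workstation2"}
NETWORK_A = {host: FLAT_HOSTS - {host} for host in FLAT_HOSTS}

exposed = reachable_from("web", NETWORK_A)
print(sorted(exposed))
```

With no segmentation, a compromised web server exposes every other host on the segment. Removing edges from the map, which is what a properly restrictive firewall does, shrinks that set.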

Furthermore, cleaning up after a compromise can be a real mess. Generally speaking, if a machine on a network segment has been compromised, all machines on that network should be considered compromised or tainted and need to be rebuilt. If the IT staff has no idea how long it has been since the attacker began compromising machines, backups could and should be considered tainted... and then the question is: when was the last "clean" backup done? Such a problem leaves the IT staff with two choices. One is to take the risk of cleaning up only some machines, which may leave a backdoor available to the attacker on some workstation that wasn't reloaded. The alternative is to take staff offline for however long it takes to completely restore the infrastructure.

Network A could be improved somewhat by adding an IDS or some other sort of passive monitoring device. However, an organization that designs a network this way in the first place generally doesn't have the resources or the skill available to purchase or implement such a system correctly. It's still a bad design. As a last little point, email headers in this design would give out internal addresses, and there are a host of other bad things that come with not having a mail relay.

Let's move on to Network B.

Another common network design, Network B has some big advantages over Network A, but there are still issues.

This design is better than Network A in that the web, email, and other such publicly available machines are now connected on a different segment. In this situation, it is more common to see things like mail relays and an IDS set up. Generally, the firewall is configured to allow only certain IP addresses using only certain protocols from the outside network into the DMZ, which is a very good thing. The IDS also provides a good way to monitor and respond to attacks on the segments, but it must be configured and attended to properly.

The two major problems with this design are traffic flow and the location of the Transaction/Database Servers. Allowing traffic to flow from the DMZ into the internal network or from the internal network to the DMZ is not good, especially if all protocols are allowed. The purpose of a DMZ is to separate the publicly available systems from the internal systems. If ports are open between the two segments, then there's not much improvement over Network A. A compromised server in the public segment still poses a serious risk of compromising the internal network as well. Consider removing the flow of traffic between the DMZ and internal segment.

The Transaction/Database Servers are also generally a prime target. If they are placed on the DMZ (even without having a publicly available address), then a compromised web server could very well mean a good chance for a compromised database server. Placing the database servers on the internal segment doesn't really improve the situation. Doing so creates traffic into the internal segment, making machines there attackable as well. Even if the protocols that the transaction servers listen to are limited to one or two, the transaction servers can be compromised and used as a launching point, or account information may be sniffed during login procedures on that zone.

Before we get onto the next design, let's discuss how best to secure communications between transaction servers and front-end web servers. Here's a diagram:

Perhaps the first question that comes to mind after looking at this diagram is, "how do I encrypt the traffic between the two hosts?" I prefer PGPnet. PGPnet is available from Network Associates as part of the PGP package. You may also download a free version from NAI for non-commercial use to test this concept. PGP has a wizard for easy setup of connections, which can be defined per individual IP address or per subnet. PGPnet binds to individual NICs below the application level, so once it is configured the first time, it's done.

Another thing to note is that the front-end web servers should be allowed to initiate connections to the transaction servers, but the transaction servers should not be allowed to initiate connections outside of their network segment. In other words, if data needs to flow between the segments, it should only happen when the front-end server makes a qualified request. The back-end servers should never be giving out information of their own volition. Such activity is commonly a sign that they've been compromised. One of the disadvantages of design B is that there isn't a third zone/network segment available on the firewall to do this unless the back-end servers reside on the internal segment, which would be a bad thing.
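As a rough sketch of how that rule could be checked against a connection log, the snippet below flags any connection that a back-end host initiated to an address outside its own segment. The subnet `10.0.30.0/24`, the sample addresses, and the `(initiator, responder)` log format are all assumptions made for illustration.

```python
import ipaddress

# Hypothetical addressing for the transaction-server segment.
BACKEND_NET = ipaddress.ip_network("10.0.30.0/24")


def suspicious_initiations(connections, backend_net=BACKEND_NET):
    """Return connections a back-end host initiated to somewhere outside its segment.

    Each connection is an (initiator_ip, responder_ip) pair of strings.
    """
    flagged = []
    for initiator, responder in connections:
        src = ipaddress.ip_address(initiator)
        dst = ipaddress.ip_address(responder)
        if src in backend_net and dst not in backend_net:
            flagged.append((initiator, responder))
    return flagged


log = [
    ("192.168.10.5", "10.0.30.7"),  # front-end server asks the back end: expected
    ("10.0.30.7", "10.0.30.8"),     # back end to back end, same segment: expected
    ("10.0.30.7", "203.0.113.9"),   # back end reaching out on its own: investigate
]
print(suspicious_initiations(log))
```

Only the third entry is flagged. Replies to a request from the front end are not new connections, so they never appear as back-end initiations in a log of this shape.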

Programmatically speaking, it is advisable to make the scripts on your web-servers (the ones that establish the session requests and gather data from the transaction servers) use dynamic logins (generated by the client) as opposed to one hard-coded username and password in the script. Hopefully, the reasons for this are obvious. If not, consider the implications of someone gaining access to read an ASP or CGI script on your front end server with the one password required to access all account information available on the back end. Also, make sure that none of the front-end servers store account information for logins to the back-end for longer than it takes to conduct a session. The account database should reside on the back-end as doing so makes it more difficult for an attacker to gain access.
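A minimal sketch of the idea, assuming a hypothetical `connect(username, password)` callable in place of a real database driver: the credentials arrive with the client's request, are handed straight to the back end, and are never written into the script or kept after the session closes. `FakeConnection` exists only so the example runs on its own.

```python
from contextlib import contextmanager


@contextmanager
def backend_session(username, password, connect):
    """Open a back-end session with credentials supplied by the client.

    `connect` is a hypothetical callable (username, password) -> connection
    object with a close() method. Nothing is cached: the credentials live
    only in this call's arguments for the length of the session.
    """
    conn = connect(username, password)
    try:
        yield conn
    finally:
        conn.close()  # always end the session, even if the request fails


class FakeConnection:
    """Stand-in for a real back-end connection, used only for this demo."""

    def __init__(self, username, password):
        self.username = username
        self.open = True

    def close(self):
        self.open = False


def handle_request(form_username, form_password):
    # The login comes from the client's request; none is hard-coded here.
    with backend_session(form_username, form_password, FakeConnection) as conn:
        return f"queried account data as {conn.username}"


print(handle_request("alice", "not-a-real-password"))
```

An attacker who reads this script learns how the session is opened but finds no password in it, which is the point of the advice above.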

Let's go on to Network C.

Network C has everything going for it that the others do not. Having discussed design up to this point, it should be easy to understand why. Note how the internal network can't communicate anywhere but out to the Internet, and only then if the internal segment initiates the connection. Keeping communication disabled between the inside network and the DMZ's is critical. Also, note how the DMZ is able to pass SQL traffic into the transaction server segment, but only if the connection is initiated from the DMZ. We are also passing only the necessary protocols both in and out of all segments. Three IDS probes have been placed in this design. Having all three in place is an excellent way to watch the network, but might seem excessive for some. If you are implementing fewer IDS probes, consider the placement of them carefully.
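The directional rules described for Network C can be written down as a default-deny table keyed on which zone initiates the connection. The zone names and port numbers below are assumptions for illustration (for example, 1433 is used for the SQL traffic because that is the usual Microsoft SQL Server port); the actual rule set depends on the services being published.

```python
# Hypothetical rule set mirroring the flows described for Network C.
# Each rule is (initiating zone, responding zone, destination port).
ALLOWED_FLOWS = {
    ("internet", "dmz", 80),         # HTTP to the public web servers
    ("internet", "dmz", 443),        # HTTPS to the public web servers
    ("dmz", "transaction", 1433),    # SQL, initiated from the DMZ only
    ("internal", "internet", 80),    # outbound browsing from the inside
    ("internal", "internet", 443),
}


def is_allowed(initiator_zone, responder_zone, port, rules=ALLOWED_FLOWS):
    """Default deny: a new connection passes only if a rule names it exactly.

    Direction matters. A rule for (dmz -> transaction) says nothing about
    (transaction -> dmz); replies on an established connection are assumed
    to be handled by the firewall's connection state, not by a second rule.
    """
    return (initiator_zone, responder_zone, port) in rules


print(is_allowed("dmz", "transaction", 1433))   # DMZ asks the back end
print(is_allowed("transaction", "dmz", 1433))   # back end initiates
print(is_allowed("internal", "dmz", 80))        # inside to DMZ
```

The first check passes and the other two are refused, which matches the two properties stressed above: the transaction segment never initiates, and the internal network and the DMZ do not talk to each other.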

As a piece of advice when implementing IDS products, attend to them well. In order for an IDS to be useful and protective, it needs to be implemented properly and updated consistently. Some of the systems listed here don't detect certain exploits properly out of the box. There are many companies who will implement and monitor an IDS for you. There are some wonderful guides on IDSes and the implementation thereof here on securityfocus.com in the IDS Focus Area.

Now for a discussion on application-based firewalls... The main reason for them: RAT protection. We talked about RATs and how to handle them in the article previous to this one. Suppose, however, that someone finds a way to disable the virus protection on a front-end server and then plants a RAT on a certain port (for example, port 80). When that RAT and the client machine attempting to compromise the network try to connect with each other, they have to find a way to encapsulate the RAT traffic into valid HTTP statements; otherwise, the application firewall will filter the traffic. The same holds true for any other port passing out of the network. RATs, as we discussed in the previous article, are a primary way of compromising an NT network. It is somewhat difficult to write or find a RAT that will work properly while fooling an application-based firewall. Once again, configure the firewall so that only necessary protocols pass through it. As a final statement about application-based firewalls, they can be easily fingerprinted based on the protocols that they are found to pass after port scans. Configuring for only necessary protocols and unloading the modules for the others will help eliminate this issue.
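To give a feel for what "valid HTTP statements" means in practice, here is a deliberately small sketch that checks only whether the first line of a payload is a well-formed HTTP/1.x request line. It is an assumption-laden toy: a real application-level firewall inspects far more than this, and the method list and sample payloads are chosen purely for illustration.

```python
import re

# Request line for HTTP/1.x: METHOD SP request-target SP HTTP/1.0 or HTTP/1.1
REQUEST_LINE = re.compile(
    rb"(GET|HEAD|POST|PUT|DELETE|OPTIONS|TRACE) \S+ HTTP/1\.[01]"
)


def looks_like_http_request(payload: bytes) -> bool:
    """Return True if the first line of `payload` is a well-formed request line."""
    first_line = payload.split(b"\r\n", 1)[0]
    # fullmatch rejects trailing junk that a plain match() would let through.
    return REQUEST_LINE.fullmatch(first_line) is not None


web_request = b"GET /index.html HTTP/1.0\r\nHost: www.example.com\r\n\r\n"
rat_traffic = b"\x00\x01CMD dir c:\\"  # made-up binary control traffic

print(looks_like_http_request(web_request))
print(looks_like_http_request(rat_traffic))
```

Raw control traffic sent to port 80 fails even this trivial check, so a RAT has to wrap its commands in syntactically valid HTTP to get through, which raises the bar for the attacker.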

As a side note, some readers may be wondering how web servers can be updated if there can be no connections made between the internal network and the DMZ. This article assumes that the web servers will be in the same geographical location as those doing the updates. Updating and collecting the stats from web servers should be done at the consoles of those servers. Opening conduits in your firewall to do the updates from the internal network is not considered wise (for reasons discussed earlier). Some may argue that if the firewall only allows the internal workstations to initiate connections, the internal network is safe. Maybe so... but I suggest not taking the risk in the first place. As mentioned before, it is foolish not to consider that the web servers will be compromised. If, despite all the layers that have been put in place (which we discussed in article two), an attacker establishes a good foothold on a web server, he/she has a better opportunity to then get into the internal network than if there were no traffic allowed between the two. Consider the risks carefully before opening a conduit for that NetBIOS or FrontPage Server Extensions traffic. For those interested in opening that conduit primarily for the sake of stats, consider a product that will send updated information in the format of an email through the existing SMTP conduit between the DMZ and internal network. There are a number of pieces of monitoring software out there that do such a thing. They will need to be patched and updated for flaws as well.
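The stats-by-email approach can be sketched in a few lines. This is not any particular monitoring product; the statistic names, addresses, and subject line are hypothetical, and the example only builds the message rather than sending it.

```python
from email.message import EmailMessage


def build_stats_message(stats, sender, recipient):
    """Format web server statistics as a plain-text email message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Web server stats report"
    # One "name: value" line per statistic, sorted for stable output.
    lines = [f"{name}: {value}" for name, value in sorted(stats.items())]
    msg.set_content("\n".join(lines))
    return msg


report = build_stats_message(
    {"requests": 18234, "errors_5xx": 12},   # made-up sample numbers
    sender="stats@www.example.com",          # hypothetical addresses
    recipient="webadmin@example.com",
)
print(report)
```

On the web server, a message like this could be handed to the mail relay with the standard library's `smtplib.SMTP(...).send_message(report)`, so the report travels over the SMTP path that is already open instead of a new conduit.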

Let's talk about some downfalls of Network C. The first downfall is that there is a single point of failure at the firewall, both in terms of security and performance. This firewall has to do a whole lot of work. For some organizations, there is not enough constant utilization for this to be a problem. For those organizations that have heavy, constant traffic, the firewall may have trouble keeping up. As well, the firewall is only a single layer unto itself. In other words, if the firewall has a vulnerability discovered in it, then a critical layer is defeated and the network is likely to be fully jeopardized. In addition, we will be talking about the need for firewalls that analyze all the way up to the application level, which makes the firewall a much lower performing contender compared to firewalls that only filter packets or run a stateful inspection of them. There are other ways to build a network; but for the vast majority of businesses out there a design like this should suffice.

For those businesses with even more stringent concerns, or bandwidth requirements that make a single firewall design not feasible, a more secure and robust network design will be discussed in the fourth and final chapter of this series.

Though we intended this series to be finished in three articles, it became apparent in the writing of this last article that we needed to split it into two pieces. Hence, the last article will discuss a final design, Network D, for those with greater performance and security demands, a need for high availability, and the additional budget required to implement it. Nuances of this design will be discussed as well. That article will be co-written by me and a fellow SBC employee, Ray Sun. Stay tuned.

The series continues in The Crux of NT Security Phase Four: Network D - High Availability, High Speed, High Security, (High Cost).

Aaron Sullivan is an engineer (specializing in building, securing, and penetrating the Microsoft NOS environment) for the fine, fine SBC Datacomm Security Team. SBC DataComm offers managed firewall services, IDS monitoring services, vulnerability assessment, disaster recovery, network design services, and penetration testing. If you would like to request the services of SBC's security team, you may visit the web-site (http://www.sbcdata.com/) or call (800) 433 1006 and leave the request there.
